Google indexing

Definition

The Google index is a large database containing all the pages that Google has recorded and can draw on as soon as a search query is made. Google sorts these pages and then displays them in the SERP. The index holds billions of pages, which the Google algorithm uses to offer users the best possible results for their search queries. The terms ranking and Google indexing should not be confused, however: being in the index is not the same as ranking well, and the difference is explained further down.

What is Googlebot, and what does it have to do with Google indexing?

Googlebot is a web crawler that visits pages and passes them on to the index. These crawlers can be thought of as small spiders that work their way from link to link and weave a large web of websites. If Googlebot finds a link to an external website, it follows that link to the external site and continues from there as soon as it encounters a new link.

A distinction is made between Googlebot for desktop computers and Googlebot for smartphones. As soon as Googlebot has visited a new page, it records the page's data, which can then be indexed. Once the page and its data are in the index, the page can be displayed to users in search results.

As you can see, it is essential to be included in the index in order to be displayed in the search engine results pages. According to Google, Googlebot should not access most websites more than once every few seconds on average, and it tries to crawl as many pages as possible on each visit.

What is the difference between ranking and Google indexing?

Once your page has made it into the index, this does not mean that it automatically ranks highly. A clear distinction is made here between indexing and ranking. The fact that your site is in the index means that you are in the big race for the best positions in the search engine results. If you want to win, even more is required.

For example, the content on the page and its relevance for users play one of the most decisive roles, as does a good link profile. Google takes a close look at which pages link to yours and gives your page more or less trust accordingly, which subsequently affects your ranking. However, exactly which metrics Google uses for its rankings is a secret. SEO experts such as those at Keyweo use their many years of experience in a wide range of application areas to bring their customers to the top of the SERP with proven strategies.

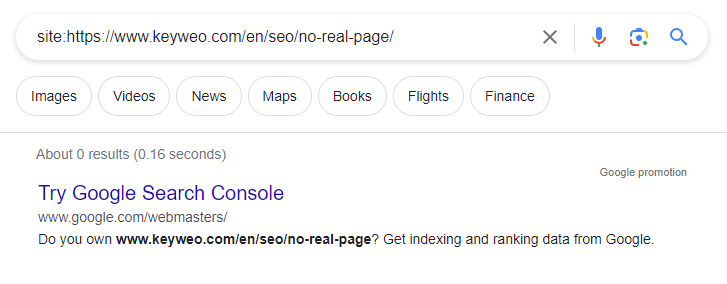

How do I find out whether my site is indexed by Google?

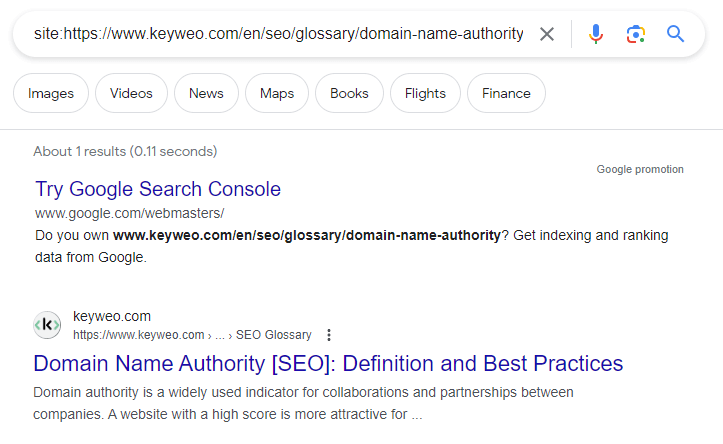

Don’t know if one of your pages is in the index? There is a very simple way to find out. All you have to do is type the site: operator, followed directly by the respective URL, into the Google search bar. This instructs Google to restrict the results to that URL. If your query returns no results, the page is most likely not in the index. A small example:

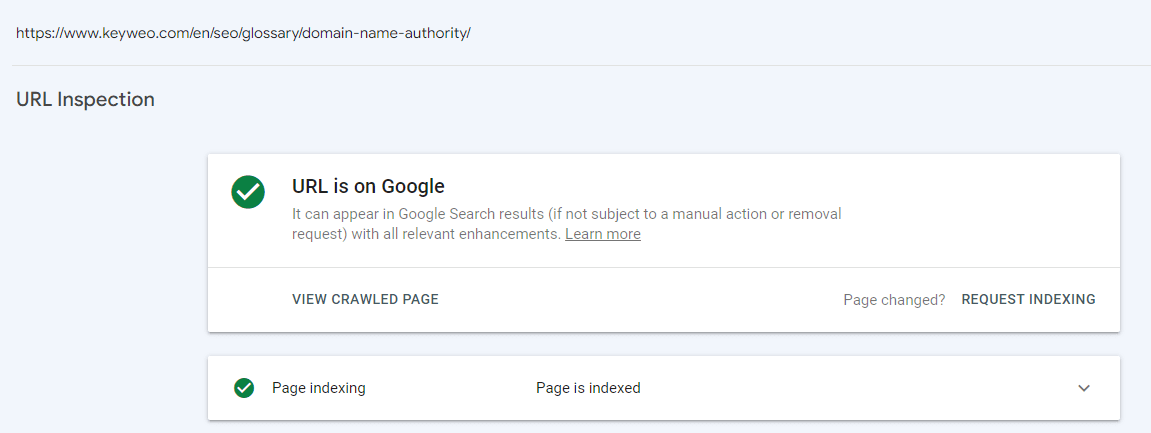

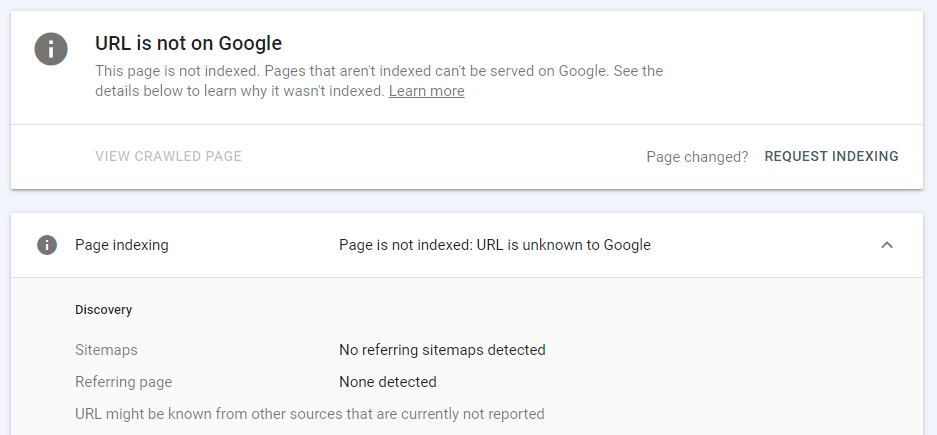
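The site: query described above can also be built programmatically, for instance to prepare a list of check links for several pages. The following is only a minimal sketch: the function name `site_query_url` and the example URL are hypothetical, and the link is meant to be opened manually in a browser.

```python
from urllib.parse import quote_plus


def site_query_url(page_url: str) -> str:
    """Return a Google search URL that runs a site: query for page_url."""
    # The site: operator is followed directly by the URL, without a space.
    query = f"site:{page_url}"
    # quote_plus escapes characters such as ":" and "/" for use in a query string.
    return "https://www.google.com/search?q=" + quote_plus(query)


# Hypothetical page used purely for illustration.
print(site_query_url("example.com/blog/my-new-page"))
```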

Another way to find out if your URL is in the Google index is by checking your Google Search Console. Here you will find all the information you need for each page, as well as information about its Google indexing.

How can I get Google to index my pages?

As already mentioned, new pages on your website are usually indexed automatically by the Google crawlers. It is therefore important that you include a new page on your website in your internal linking so that the crawlers can also find the new page.

Remember that every website has a certain crawl budget. If this is used up, no new pages will be crawled for the time being. To make the most of this budget, it is advisable to reduce loading times on your website and avoid technical errors. The deeper a page sits in your website structure, the longer it can take for the crawl budget to reach it. A page that is linked directly from the homepage is therefore crawled more easily than a page that is only found after many clicks.

It can take a few days or even weeks from crawling to indexing. However, you can also prompt Google indexing yourself. Anyone who has just published a new website or made major changes to their website has the option of submitting their sitemap to Google. The sitemap lists the URLs of your website that you want Google to find. Submitting it can speed up indexing, although it does not guarantee it.

You can also ask Google to index individual URLs directly. To do this, enter the desired URL in the inspection search bar of your Google Search Console. If the URL is not in the index, you can ask Google to add it via “Request indexing”. But be careful! You only have a limited quota of requests. If your pages are often indexed very slowly or not at all, it is better to get to the bottom of the problem.
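To make the idea of page depth concrete, here is a minimal sketch that calculates how many clicks each page is away from the homepage using a breadth-first search. The `site` dictionary is a hypothetical internal link structure, not data from a real crawl, and the function name `click_depths` is chosen for illustration only.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Return the fewest clicks needed to reach each page starting from home."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            # The first time a page is seen is also the shortest path to it.
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site structure: each page maps to the pages it links to.
site = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-1/appendix"],
}

for page, depth in sorted(click_depths(site, "/").items(), key=lambda item: item[1]):
    print(depth, page)
```

Pages with a high depth value are the ones most likely to benefit from an additional link on a page closer to the homepage.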
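If your CMS does not generate a sitemap for you, a basic one can be produced with a few lines of code. The sketch below assumes the standard sitemap XML format with one `<loc>` entry per URL; the function name `build_sitemap` and the example URLs are hypothetical, and optional fields such as the last modification date are left out.

```python
import xml.etree.ElementTree as ET

# Namespace used by the standard sitemap XML format.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal sitemap.xml document listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical URLs used purely for illustration.
sitemap_xml = build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/blog/my-new-page",
])
print(sitemap_xml)
```

The resulting text would be saved as sitemap.xml on the website and then submitted in the Google Search Console.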

Why are my pages not being indexed by Google?

If you see that your newly added pages are still not in the index after a few weeks, you should check this. There are a number of possibilities as to why they have not yet been indexed by Google.

Page is not in the internal linking

It is possible that you have created a so-called orphan page by forgetting to include your newly added page in the internal linking of your website. If no link on your website leads to your new page, Google crawlers and users have no way of reaching your page. It is then an “orphan page”. To find orphan pages on your site, there are several tools, such as Screaming Frog, which help you to identify these pages. Once these pages are known, you can then include them in the internal linking of your website.

It is also advisable to include the page in the sitemap. The sitemap helps Google to find and index pages more quickly. You can usually find it by adding /sitemap.xml to the end of your domain. It should contain all the pages on your website that you want indexed. Most sitemaps are updated automatically by plug-ins in the CMS. If you do not have such a plug-in, you should add new pages to the sitemap manually. You can also check in your Google Search Console whether a newly created page is in the sitemap. To do this, search for the URL and look for the relevant information under Sitemap.
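The principle behind orphan page detection can be sketched in a few lines: take the list of pages you know exist (for example from the sitemap) and subtract every page that receives at least one internal link. The data below is hypothetical, and dedicated tools such as Screaming Frog collect it by crawling the real site.

```python
def find_orphans(known_pages: set[str], links: dict[str, list[str]], home: str) -> set[str]:
    """Return known pages that no internal link points to."""
    linked = {target for targets in links.values() for target in targets}
    # The homepage is the entry point, so it is not counted as an orphan.
    return known_pages - linked - {home}


# Hypothetical data: pages listed in the sitemap and the internal links found on each page.
sitemap_pages = {"/", "/services", "/blog", "/blog/post-1", "/landing/old-campaign"}
internal_links = {
    "/": ["/services", "/blog"],
    "/blog": ["/blog/post-1"],
}

print(find_orphans(sitemap_pages, internal_links, home="/"))
```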

A nofollow link was set by mistake

In this case, your page is in your internal linking, but the link has been given a nofollow attribute. This makes no difference to users, as they can click on the link as normal and are taken to the next page. For Googlebot, however, a nofollow attribute signals that the crawlers should not follow the link to the linked page. If this is the only link pointing to the page, the page may never be visited, which prevents Google indexing.
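To check a page for accidentally set nofollow links, you can scan its HTML for links whose rel attribute contains nofollow. This is a minimal sketch using Python's built-in HTML parser; the class name `NofollowLinkFinder` and the sample HTML are hypothetical.

```python
from html.parser import HTMLParser


class NofollowLinkFinder(HTMLParser):
    """Collect the href of every <a> tag whose rel attribute contains nofollow."""

    def __init__(self):
        super().__init__()
        self.nofollow_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        # rel can hold several space-separated values, e.g. "noopener nofollow".
        rel_values = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_values and attributes.get("href"):
            self.nofollow_links.append(attributes["href"])


# Hypothetical HTML snippet used purely for illustration.
sample_html = (
    '<a href="/services">Services</a>'
    '<a href="/new-page" rel="nofollow">New page</a>'
    '<a href="/partner" rel="noopener NoFollow">Partner</a>'
)

finder = NofollowLinkFinder()
finder.feed(sample_html)
print(finder.nofollow_links)
```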

Revise the links

You have made sure that your page is found in the internal linking with a dofollow link and yet your page is not indexed? Then it could be that the links you have on your site so far are too weak.

As already mentioned, pages that are linked from the homepage have a much higher chance of being crawled than those that can only be reached in many clicks. So if you notice that your pages are being indexed very slowly or not at all, it may be that your website structure is too deep and the links that have already been set are not strong enough. In that case, the crawl budget may be used up before Googlebot reaches your page. For this reason, it is advisable to place a link on a page that is closer to the homepage in order to increase the probability of Google indexing.

But of course you can’t link to every new page from your homepage. Your website also has other strong pages that are suitable for placing such a link. To find out which ones these are, you can use the Ahrefs Site Explorer, for example.

Your site has poor content

Google’s aim is to show its users high-quality pages that provide users with quick, clear answers to their search queries. If you have created a new page that contains hardly any helpful information and provides inadequate answers for the user, this may be a reason for Google not to index your page. So take another look at the content on your page and ask yourself the following questions:

- Does the text answer the user’s questions? Tip: Enter search queries that match your page into Google and take a look at the similar questions from Google. Have you answered all of them in your text?

- Does the text comprehensively cover the topic described? Tip: Use tools such as Thruu to find out how much text your page should contain and what topics your competitors are covering.

- Is the content presented clearly and concisely? Tip: Use bullet points, bold text, images and videos to make your content more appealing and informative.

- Does the content already exist on your site? Duplicate content should be avoided at all costs. If you have simply copied a text from another page, for example, you should definitely rectify this!

Set backlinks

You have already tried everything and your page is still not in the index? Then you also have the option of obtaining a backlink from an external website to your page, to benefit from link juice and increase the chances of your page being crawled. When a backlink is placed, Google attributes more trust and popularity to the linked website. You can imagine it like this:

Max & Sarah are talking about a vacation. Max would like to spend this year’s vacation in Greece. Sarah recommends a hotel in Crete. As a result of the recommendation, Max immediately has more trust in the hotel in Crete that was recommended to him, in contrast to other hotels.

And Google works in a similar way. Let’s assume that Sarah in this case is a travel blog that links to the hotel in Crete. Google, in our example Max, would see that the hotel is of particular relevance and accordingly classifies this page as more valuable than before.

So if your website frequently has problems with new pages not being indexed at all or only very slowly, it is helpful to direct a powerful backlink from another page to your own.

When should I exclude a page from Google indexing?

Of course, you can also ask Google not to index some pages. There are several reasons why this may make sense.

- The page has not yet been completed and is currently being revised. (e.g. a page for the upcoming Christmas sale)

- The page should only be accessible to certain people and therefore not be found in the SERP. (e.g. a page only for club members, employees, etc.)

- The page should only be visible if users have completed a conversion on the page (e.g. shopping cart, thank you pages, etc.)

- Pages that do not offer users any added value but still need to be on the website (e.g. legal information, privacy policy, etc.)

Basically, pages that offer users little information when viewed out of context should not be in the index. They only use up your crawl budget, with the result that really relevant pages are indexed more slowly. It may therefore also be an option to look at all indexed pages on your website and reduce their number. You can then remove pages that are not highly relevant for users from the index.

How can you exclude pages from the Google index?

There are several ways to prohibit Google from indexing a specific URL. Here are the most popular options:

Set a crawl block

So-called crawl blocks prevent crawlers from visiting your entire website, entire categories, or even individual pages. These crawl blocks usually look like this:

User-agent: Googlebot
Disallow: /

User-agent: *
Disallow: /

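These crawl blocks are added to the robots.txt file using the code above. The first block addresses only Googlebot, the second addresses all crawlers. If the entire website is blocked, only the slash symbol appears after Disallow. If only an individual URL is blocked, its path is entered there instead, for example Disallow: /no-real-page. This prevents Google from crawling that specific page.

However, you can also exclude entire categories by specifying the path of the category there. Keep in mind that a crawl block only stops crawling: a blocked URL can still end up in the index if other pages link to it.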
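Before uploading a robots.txt file, it can be useful to test which paths your rules actually block. The sketch below uses Python's built-in `urllib.robotparser` on a hypothetical rule set; the paths and the helper name `is_crawl_allowed` are assumptions for illustration, and Python's parser does simple prefix matching, which may differ in detail from how Google interprets the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block one single page and one whole category for all crawlers.
rules = """\
User-agent: *
Disallow: /no-real-page
Disallow: /internal/
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())


def is_crawl_allowed(url_path: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the rules above allow user_agent to crawl url_path."""
    return parser.can_fetch(user_agent, url_path)


for path in ["/no-real-page", "/internal/reports", "/blog/post-1"]:
    print(path, "allowed" if is_crawl_allowed(path) else "blocked")
```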

Noindex tag prevents Google indexing

If you do not want your page to be indexed, you can set a noindex tag to prevent this. These noindex tags are placed in the <head> section of the page's HTML code and look like this:

<meta name="robots" content="noindex">

<meta name="googlebot" content="noindex">

This means that you can also tell Googlebot via the HTML code if you do not want a page on your website to be indexed.
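If you are unsure whether a page carries a noindex tag, you can check its HTML for the meta tags shown above. The following is a minimal sketch using Python's built-in HTML parser; the names `NoindexDetector` and `has_noindex` are hypothetical, and the sketch only looks at meta tags in the HTML you pass in.

```python
from html.parser import HTMLParser


class NoindexDetector(HTMLParser):
    """Set self.noindex to True if a robots or googlebot meta tag contains noindex."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        content = (attributes.get("content") or "").lower()
        # The content attribute may list several directives, e.g. "noindex, nofollow".
        directives = [directive.strip() for directive in content.split(",")]
        if name in ("robots", "googlebot") and "noindex" in directives:
            self.noindex = True


def has_noindex(html: str) -> bool:
    """Return True if the given HTML contains a noindex meta tag."""
    detector = NoindexDetector()
    detector.feed(html)
    return detector.noindex


# Hypothetical HTML snippets used purely for illustration.
print(has_noindex('<head><meta name="robots" content="noindex, nofollow"></head>'))
print(has_noindex('<head><meta name="robots" content="index, follow"></head>'))
```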

Protect certain areas with a password

Especially if you want to make certain areas of your website accessible only to your employees or only to club members etc., you also have the option of protecting certain pages with a password using various methods. This is also useful if your content can already be found in Google searches, and you would now like to protect it.

Pages that have been deleted from the Google index

Sometimes it happens that Google deletes pages from the index after they have already been indexed. If this affects pages that have previously generated high conversions for you, this is a danger for your business and should be analyzed in detail.

Most of the time, this is the result of black-hat SEO strategies that have been practiced on your site. Here are some practices that could lead to removal from the Google index:

- Dubious link profile

- Overly optimized anchor texts

- Keyword stuffing

- Duplicate content

- Cloaking

- Doorway pages

- and much more

If you want to be sure that Google will keep your site in the index, you should earn your rankings in the SERPs fairly, with high-quality, clear content that offers users added value. Black-hat SEO strategies have no place there. Although they seem to promise fast and successful results in the SERPs, these strategies lead to penalties and traffic losses in the long term.

However, if Google has removed your pages from the index, you can find a note about this in your Google Search Console. There, Google will give you information about what could have led to your page being deleted from the index. Take a close look and revise the affected page. You can then submit a reconsideration request in the Google Search Console. Find out more in our glossary entry on the topic of Google Penalty.

Conclusion

The way Google works is complex and sometimes very difficult to understand. But you can also take advantage of this and benefit from it. Google usually indexes your site automatically. With a little patience and high-quality content, you should have no problems with your pages being found in the index after a while. However, if you do have problems, you can use a few tricks to make it easier for Google to crawl your website and speed up Google indexing.

Make sure you keep your website structure as simple as possible, update the sitemap regularly and build your internal links strategically. Then you should have no more problems with Google not indexing your website in the future.
